Apple’s iPhone voice-to-text feature writes ‘Trump’ when the user says ‘racist’

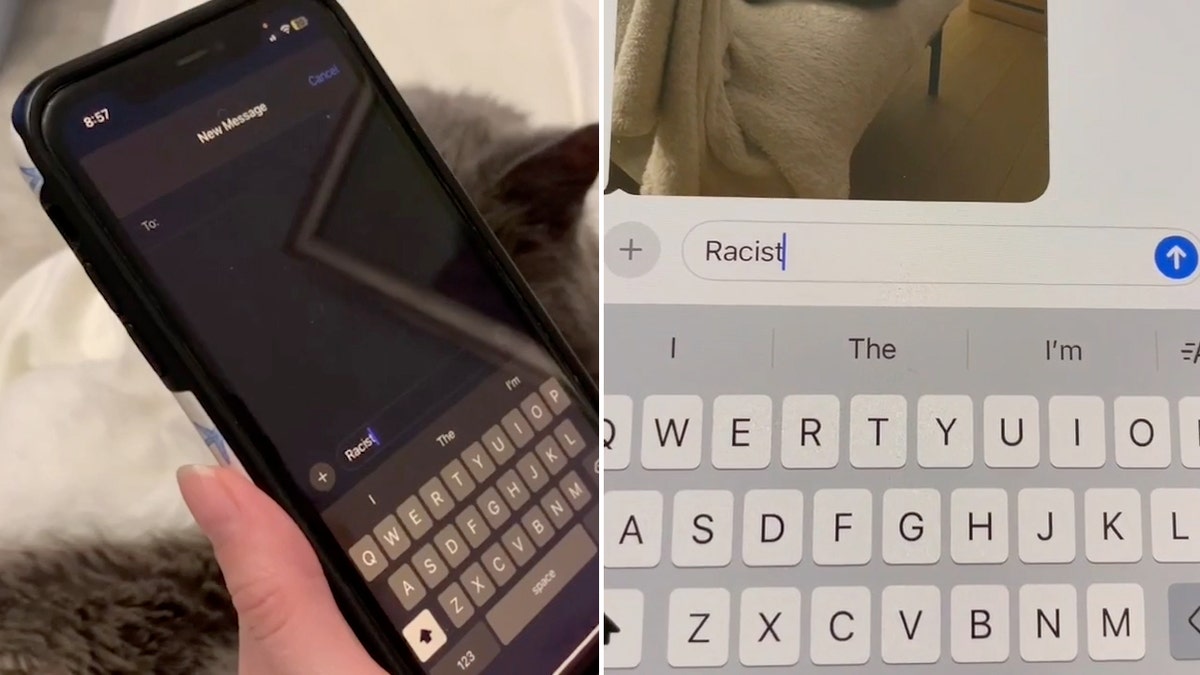

Apple’s iPhone voice-to-text feature is causing controversy after a viral TikTok video showed a user speaking the word “racist,” which initially appeared as “Trump” before reverting to “racist.”

Fox News Digital was able to replicate the problem several times. The voice-to-text dictation feature briefly displayed “Trump” when the user said “racist” before quickly changing to “racist,” just as in the viral TikTok video.

However, “Trump” did not appear every time the user said “racist.”

The voice-to-text feature also wrote words such as “Reinhold” and “You” when the user said “racist.” Most of the time, the feature accurately wrote “racist.”

Apple’s iPhone voice-to-text feature occasionally appears to write “Trump” when the user says “racist.” (Fox News)

An Apple spokesperson said on Tuesday that the company was addressing the problem.

“We are aware of a problem with the speech recognition model that powers dictation, and we are rolling out a fix as soon as possible,” the spokesperson said.

Apple says that speech recognition models can temporarily display words with some phonetic overlap before landing on the correct word. The bug affects other words with an “r” consonant when they are dictated, Apple says.

This is not the first time technology has sparked controversy over what is perceived as a slight against President Donald Trump.

In September, a video went viral showing Amazon’s Alexa virtual assistant giving reasons to vote for then-Vice President Kamala Harris while refusing to provide similar answers for Trump.

Representatives of the online retail giant briefed House Judiciary Committee staff on the incident and explained that Alexa uses pre-programmed manual overrides, created by Amazon’s information team, to respond to certain user prompts, according to a source familiar with the briefing.

For example, Alexa would tell users who asked for a reason to vote for Trump or then-President Joe Biden, “I cannot provide content that promotes this specific political party or candidate.”

Before the video went viral, Amazon had programmed manual overrides only for Biden and Trump. It had failed to add Harris because very few users had asked Alexa for reasons to vote for her, the source said.

Amazon’s Alexa virtual assistant previously gave reasons to vote for then-Vice President Kamala Harris while refusing to provide similar answers for then-former President Donald Trump. (Amazon)

Amazon became aware of the problem with Alexa’s pro-Harris answers within an hour of the video being posted on X and going viral. The company fixed the problem with a manual override for such questions about Harris within two hours of the video being posted, according to the source.

Before the fix was in place, Fox News Digital prompted Alexa for reasons to vote for Harris and received an answer saying that “she is a female of color with a comprehensive plan to resolve racial injustice and inequality throughout the country.”

The source said Amazon apologized at the briefing for Alexa’s apparent political bias. The company said that although it has a policy aimed at preventing Alexa from having a “political opinion” or “bias for or against a particular party or a particular candidate … obviously we are here today because we did not meet that bar in this incident.”

The tech giant has since revised its system and now has manual overrides in place for all candidates and numerous election-related queries. Previously, Alexa had manual overrides only for presidential candidates.

Fox Business’ Eric Revell, Hillary Vaughn and Chase Williams contributed to this report.