Google removes pledge not to use AI for weapons, surveillance

Sundar Pichai, CEO of Alphabet Inc., during Stanford's 2024 Business, Government and Society forum in Stanford, California, on April 3, 2024.

Justin Sullivan | Getty Images

Google has removed a pledge to abstain from using AI for potentially harmful applications, such as weapons and surveillance, according to the company's updated "AI Principles."

A prior version of the company's AI principles said the company would not pursue "weapons or other technologies whose principal purpose or implementation is to cause or directly facilitate injury to people," and "technologies that gather or use information for surveillance violating internationally accepted norms."

Those commitments are no longer displayed on its AI Principles website.

"There's a global competition taking place for AI leadership within an increasingly complex geopolitical landscape," reads a Tuesday blog post written by Demis Hassabis, the CEO of Google DeepMind. "We believe democracies should lead in AI development, guided by core values like freedom, equality and respect for human rights."

The company's updated principles reflect Google's growing ambitions to offer its AI technology and services to more users and clients, including governments. The change is also in line with increasing rhetoric from Silicon Valley leaders about a winner-take-all AI race between the U.S. and China, with Palantir CTO Shyam Sankar saying Monday that "it's going to be a whole-of-nation effort that extends well beyond the DoD in order for us as a nation to win."

The previous version of the company's AI principles said Google would "take into account a broad range of social and economic factors." The new AI principles state Google will "proceed where we believe that the overall likely benefits substantially exceed the foreseeable risks and downsides."

In its Tuesday blog post, Google said it will "stay consistent with widely accepted principles of international law and human rights, always evaluating specific work by carefully assessing whether the benefits substantially outweigh potential risks."

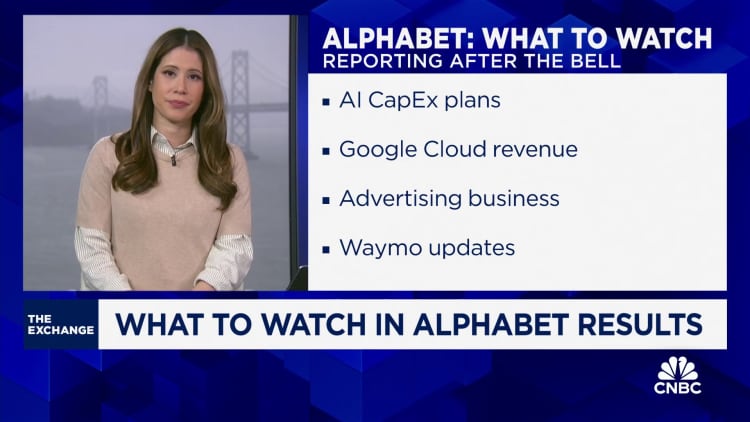

The new AI principles were first reported by The Washington Post on Tuesday, on the eve of Google's fourth-quarter earnings. The company's results missed Wall Street's revenue expectations and drove shares down as much as 9% in after-hours trading.

Hundreds of protesters, including Google workers, gathered in front of Google's San Francisco offices and shut down traffic on that block of Market Street on Thursday evening, demanding an end to the company's work with the Israeli government and protesting Israeli attacks on Gaza, in San Francisco, California, United States, on December 14, 2023.

Anadolu | Anadolu | Getty Images

Google established its AI principles in 2018 after declining to renew a government contract called Project Maven, which helped the government analyze and interpret drone videos using artificial intelligence. Before the deal ended, several thousand employees signed a petition against the contract, and dozens resigned in opposition to Google's involvement. The company also dropped out of the bidding for a $10 billion Pentagon contract in part because it "couldn't be sure" the work would align with its AI principles, it said at the time.

In touting its AI technology to clients, Pichai's leadership team has aggressively pursued federal government contracts, which has caused heightened strain in some areas within Google's outspoken workforce.

“We believe that companies, governments and organizations that share these values should work together to create AI that protects people, promotes global growth and supports national security,” the Google blog said on Tuesday.

Google last year terminated more than 50 employees after a series of protests against Project Nimbus, a $1.2 billion joint contract with Amazon that provides the Israeli government and military with cloud computing and AI services. Executives have repeatedly said the contract doesn't violate any of the company's "AI principles."

However, documents and reports showed the company's agreement allowed for giving Israel AI tools that included image categorization and object tracking, as well as provisions for state-owned weapons manufacturers. The New York Times reported in December that four months before signing on to Nimbus, Google officials expressed concern that signing the deal would harm its reputation and that "Google Cloud services could be used for, or linked to, the facilitation of human rights violations."

Meanwhile, the company has cracked down on internal discussions about geopolitical conflicts such as the war in Gaza.

In September, Google announced updated guidelines for its Memegen internal forum that further restricted political discussions about geopolitical content, international relations, military conflicts, economic actions and territorial disputes, according to internal documents viewed by CNBC at the time.

Google didn't immediately respond to a request for comment.