Meta, Facebook to drop fact-checkers: What does this mean for social media?

Meta, the owner of Facebook and other social media platforms, will implement major changes to its content moderation policies, founder Mark Zuckerberg announced this week in a video titled, “More speech and fewer mistakes”.

Among the changes, Meta will stop using fact-checking organisations and will instead switch to a system of community notes – similar to the one used by the X platform.

The move, revealed on Tuesday, comes as tech executives brace for the arrival of incoming US President Donald Trump, whose right-wing supporters have long decried online content moderation as a tool of censorship.

So why is this happening now and will it lead to more misinformation?

In a video posted to social media platforms, Zuckerberg explained that Meta plans to scrap fact-checking in favour of a new system of community notes, which users can add to other people's posts to flag content that may be misleading or false. Meta plans to roll this community notes system out in the coming months.

Zuckerberg said fact-checking organisations had proved to be “biased” when it came to selecting content to moderate and added that he wanted to ensure free speech on all platforms. “It’s time to get back to our roots around free expression,” he wrote in the post accompanying the five-minute video.

“Our system attached real consequences in the form of intrusive labels and reduced distribution. A programme intended to inform too often became a tool to censor.”

While this policy will extend to all subjects, Zuckerberg singled out the issues of “gender and immigration” in particular.

Meta’s upcoming modifications will take effect across its trio of major social media platforms: Facebook, Instagram and Threads, which are used by more than 3 billion people worldwide.

Is Meta also moving operations to Texas? Why?

Meta plans to relocate its content moderation teams from California to Texas, hoping the move will “help us build trust” while having “less concern about the bias of our teams”. Some experts see the move as politically motivated and say it could have negative implications for how political content is handled on Meta’s platforms.

“This decision to move to Texas is born out of both some practicality and also some political motivation,” said Samuel Woolley, founder and former director of propaganda research at the University of Texas at Austin’s Center for Media Engagement, speaking to the digital news outlet The Texas Tribune.

“The perception of California in the United States and among those in the incoming [presidential] administration is very different than the perception of Texas,” he added.

Zuckerberg appears to be following in the footsteps of Elon Musk, who moved Tesla’s headquarters to Austin, Texas, in 2021. In an X post in July, Musk also announced plans to move two of his other companies, X and SpaceX, from California to Texas, citing Governor Gavin Newsom’s recently enacted SAFETY Act, which prevents schools from requiring teachers to notify parents when a student asks to be recognised by a “gender identity” that differs from their sex.

And 𝕏 HQ will move to Austin https://t.co/LUDfLEsztj

— Elon Musk (@elonmusk) July 16, 2024

How has content moderation on Meta platforms worked until now?

Until now, Meta platforms such as Facebook and Threads have used third-party fact-checking organisations to verify the authenticity and accuracy of content posted to each platform.

These organisations evaluate content and flag misinformation for further scrutiny. When a fact-checker rates a piece of content as false, Meta substantially reduces its distribution so that it reaches a much smaller audience. However, third-party fact-checkers do not have the authority to delete content, suspend accounts or remove pages from the platform. Only Meta can remove content that violates its Community Standards and Ads policies. This includes, but is not limited to, hate speech, fraudulent accounts and terrorism-related material.

Since 2016, Meta has worked with more than 90 fact-checking organisations in more than 60 languages around the world. Some of the major fact-checking organisations it works with include PolitiFact, Check Your Fact, FactCheck.org and AFP Fact Check. Some partnerships with fact-checking organisations go back nearly 10 years, with PolitiFact being one of the earliest to join forces with Meta in 2016.

How will the new moderation work?

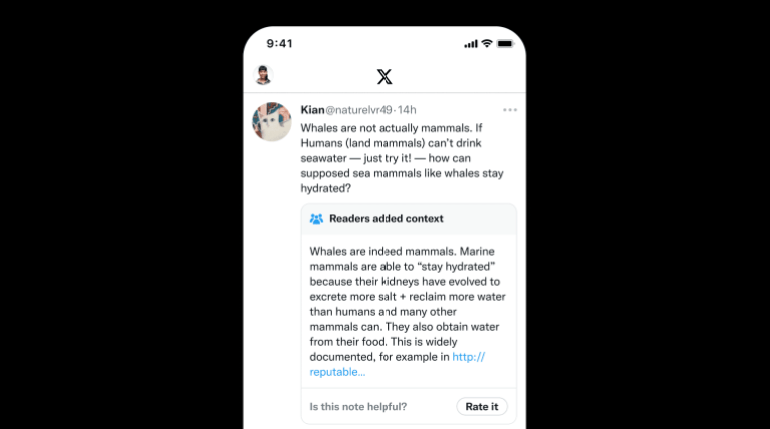

Meta will use Community Notes to moderate content instead of fact-checkers, following the model of X, the platform formerly known as Twitter, which billionaire Elon Musk bought for $44bn in 2022.

X’s Community Notes, previously known as Birdwatch, was piloted in 2021 and gained significant traction in 2023 as a feature designed to identify and highlight potentially misleading information on the platform.

Community Notes appear in boxes labelled “Readers added context” below posts on X which have been identified as potentially misleading or inaccurate. A Community Note typically provides a correction or clarification, frequently supported by a hyperlink to a reputable online source which can verify the information provided.

These annotations are written by eligible platform users who have opted into the programme. A user is eligible to participate as long as they have had no X rule violations on their account since January 2023, have a verified phone number from a legitimate mobile carrier and have an account that is at least six months old.

Once approved by X as a Notes contributor, participants may rate Community Notes as “Helpful” or “Not Helpful”. Contributors receive a “Rating Impact” score that reflects how often their ratings help notes reach “Helpful” or “Not Helpful” status. A Rating Impact score of 5 allows a contributor to progress to the next level, where they can write Community Notes for X posts as well as rate them.
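To make the joining criteria and the rater-to-writer progression concrete, here is a minimal Python sketch that encodes only the rules described above. It is not X's code: the `Account` type, the function names and the 183-day stand-in for "six months" are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Cut-off from the article: no X rule violations since January 2023.
VIOLATION_CUTOFF = date(2023, 1, 1)
# Approximation of "at least six months old"; the exact day count is an assumption.
MIN_ACCOUNT_AGE_DAYS = 183
# Rating Impact score needed to move from rating notes to writing them.
WRITER_RATING_IMPACT = 5


@dataclass
class Account:
    """Hypothetical account record holding only the fields the rules need."""
    created: date
    has_verified_carrier_phone: bool
    last_violation: Optional[date]  # None if the account has never had a violation
    rating_impact: int = 0


def is_eligible(account: Account, today: date) -> bool:
    """True if the account meets all three joining criteria."""
    no_recent_violation = (
        account.last_violation is None or account.last_violation < VIOLATION_CUTOFF
    )
    old_enough = (today - account.created).days >= MIN_ACCOUNT_AGE_DAYS
    return no_recent_violation and account.has_verified_carrier_phone and old_enough


def can_write_notes(account: Account) -> bool:
    """Contributors start as raters and unlock note writing at a Rating Impact of 5."""
    return account.rating_impact >= WRITER_RATING_IMPACT
```

In this sketch a long-standing account with a verified carrier number and no violations since 2023 passes `is_eligible`, but it can only write notes once its Rating Impact reaches 5.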

Community Notes that receive five or more ratings undergo algorithmic evaluation. The algorithm categorises each note as “Helpful”, “Not Helpful” or “Needs more ratings”. At this stage, notes are visible only to contributors, not to X users at large.

Only those Notes which receive a final “Helpful” status from the algorithm are shown to all X users beneath the corresponding post.
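The lifecycle of a note can be sketched the same way. In this hypothetical Python example, a note with fewer than five ratings stays at "Needs more ratings", and only a "Helpful" verdict makes it visible to all users. X's real scoring algorithm is considerably more complex and is not reproduced here; `naive_majority_scorer` and its two-thirds thresholds are placeholders invented purely for illustration.

```python
from enum import Enum
from typing import Callable, Sequence

# Threshold from the article: notes need five or more ratings before scoring.
MIN_RATINGS_FOR_SCORING = 5


class Status(Enum):
    NEEDS_MORE_RATINGS = "Needs more ratings"
    HELPFUL = "Helpful"
    NOT_HELPFUL = "Not Helpful"


def naive_majority_scorer(ratings: Sequence[bool]) -> Status:
    """Placeholder scorer (NOT X's algorithm): True means a 'Helpful' rating."""
    share_helpful = sum(ratings) / len(ratings)
    if share_helpful >= 2 / 3:
        return Status.HELPFUL
    if share_helpful <= 1 / 3:
        return Status.NOT_HELPFUL
    return Status.NEEDS_MORE_RATINGS


def note_status(
    ratings: Sequence[bool], score: Callable[[Sequence[bool]], Status]
) -> Status:
    """Notes below the rating threshold are not scored at all."""
    if len(ratings) < MIN_RATINGS_FOR_SCORING:
        return Status.NEEDS_MORE_RATINGS
    return score(ratings)


def visible_to_all_users(status: Status) -> bool:
    """Only notes with a final 'Helpful' status are shown beneath the post."""
    return status is Status.HELPFUL
```

Passing the scorer in as a parameter keeps the part the article describes (the five-rating threshold and the "Helpful"-only visibility rule) separate from the scoring step, whose details the article does not give.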

Although Meta has not outlined exactly how its community notes would work, Zuckerberg stated in his video that they would be similar to X’s community note system.

There is debate about how well Community Notes work on X, which has 600 million users.

Yoel Roth, the former head of Twitter’s trust and safety department, wrote in a Bluesky post: “Genuinely baffled by the unempirical assertion that Community Notes ‘works.’ Does it? How do Meta know? The best available research is pretty mixed on this point. And as they go all-in on an unproven concept, will Meta commit to publicly releasing data so people can actually study this?”

However, some research into the effectiveness of Community Notes has been carried out.

In October 2024, the University of Illinois published a working paper about X’s Community Notes feature, led by assistant professor of business administration Yang Gao. Its findings were broadly positive.

“We find that receiving a displayed community note increases the likelihood of tweet retraction, thus underscoring the promise of crowdchecking. Our mechanism tests reveal that this positive effect mainly stems from consideration of users who had actively interacted with the misinformation (ie, observed influence), rather than of users who might have passively encountered or would encounter the misinformation (ie, presumed influence),” Gao explained in the paper.

Another paper, led by researchers at the University of Luxembourg and posted in April 2024 on the Open Science Framework (OSF), a platform researchers use to share academic papers, found that Community Notes reduced the spread of misleading posts by an average of 61.4 percent.

However, the research paper added, “Our findings also suggest that Community Notes might be too slow to intervene in the early (and most viral) stage of the diffusion.”

A recent analysis by the Center for Countering Digital Hate (CCDH) of notes on election-related claims posted between January 1 and August 25, 2024, also revealed limits to the effectiveness of X’s Community Notes feature.

The researchers examined 283 posts containing election-related claims that independent fact-checking organisations had determined to be false or misleading. The analysis focused exclusively on posts that had received at least one proposed note from Community Notes contributors. It found that on 209 of the 283 misleading posts in its sample, “equivalent to 74 percent,” the report stated, accurate notes were not being shown to all X users because they had not reached the “helpful” ranking.

It added that delays in moving notes to “helpful” status contributed to this.

According to the Washington Post, which carried out a separate analysis of the data, only 7.4 percent of the election-related Community Notes proposed in 2024 were ever shown, and that share dropped to 5.7 percent in October.

How have fact-checking organisations reacted to Meta’s decision to switch to Community Notes?

Some fact-checking organisations have criticised the move, saying it is unnecessary and politically driven.

“Facts are not censorship. Fact-checkers never censored anything. And Meta always held the cards,” said Neil Brown, president of the Poynter Institute, the journalism nonprofit that owns PolitiFact, in a public statement. “It’s time to quit invoking inflammatory and false language in describing the role of journalists and fact-checking.”

“We’ve learned the news as everyone has today. It’s a hard hit for the fact-checking community and journalism. We’re assessing the situation,” the news agency AFP, which operates AFP Fact Check, said in a statement.

Who else has criticised the move and why?

Some experts in social media have cautioned that the change may open the door to an increase in misinformation appearing on Meta platforms.

“I suspect we will see a rise in false and misleading information around a number of topics, as there will be an incentive for those who want to spread that kind of content,” Claire Wardle, an associate professor in communication at Cornell University, told Vox, a digital media company and news website.

Others believe Meta is deliberately aiming to placate the right wing as a second Trump presidency looms and is opening the door for more MAGA-centred content.

Lina Khan, who chairs the Federal Trade Commission, expressed concern during a CNBC interview on Tuesday that Meta executives may be pursuing favourable treatment from the Trump administration. She suggested that the company might be attempting to secure a “sweetheart deal” with the White House.

“I think that Mark Zuckerberg is trying to follow in Elon’s footsteps, which means that actually, they’re going to use this guise of free speech to actually suppress critics of Trump and critics of themselves,” New York Representative Alexandria Ocasio-Cortez told Business Insider.

“There’s been a shift rightward in terms of attitudes toward free speech in Silicon Valley and perhaps this decision is part of that,” Sol Messing, a research associate professor at New York University’s Center for Social Media and Politics and a former research scientist at Facebook, told ABC News.

Nate Silver, founder of FiveThirtyEight and a political pollster who now runs the Silver Bulletin blog on Substack, gave his take on the change in a recent blog post: “As someone who tries to be non-hypocritically pro-free speech, my inclination is to welcome the changes. But Zuck’s motivations are questionable: there’s no doubt that Meta and other media companies are under explicit and intense political pressure from the incoming Trump administration. So perhaps it’s the right move for the wrong reasons.”

Who has welcomed the move?

Some big names in social media have actively welcomed Meta’s announcement.

Elon Musk, the CEO of Tesla, who will lead Trump’s Department of Government Efficiency (DOGE) with former Republican presidential hopeful Vivek Ramaswamy, wrote “This is cool” in a recent X post.

This is cool pic.twitter.com/kUkrvu6YKY

— Elon Musk (@elonmusk) January 7, 2025

Cenk Uygur, founder of The Young Turks, a major left-leaning digital news channel, welcomed the change in an X post. “He actually mentioned something that is not getting a lot of attention. He said, basically we are done with relying on legacy media,” he wrote. “That’s who the ‘fact checkers’ were in the past. Legacy media has an enormous agenda. They are not at all objective.”

.@cenkuygur points out something many people are missing in Mark Zuckerberg’s statement on Meta’s “fact checking.” https://t.co/LDGdTcQhkD pic.twitter.com/JbsGKtumMd

— The Young Turks (@TheYoungTurks) January 9, 2025

The move is “a great step toward the decentralisation of information and the end to the control legacy media has had on the prevailing narrative”, Christopher Townsend, an Air Force veteran and conservative rapper with more than 300,000 Instagram followers, told Business Insider.

“It seems like Meta is finally taking a page from Elon Musk’s playbook & letting Americans make decisions for themselves. It’s about time Meta owned up to censoring Americans,” Republican Representative Randy Weber of Texas told Business Insider.

President-elect Donald Trump appeared to believe he had played a role in Meta’s revised content moderation policy when he spoke at a news conference at Mar-a-Lago on Tuesday. Asked if his previous criticism of the company had prompted the change, Trump’s response was succinct: “Probably.”

How will Meta’s change impact regions outside the US?

Although the new feature will initially roll out only in the United States over the next couple of months, Zuckerberg also referred to other regions and countries in his video, including Europe, China and Latin America.

“Europe has an ever-increasing number of laws, institutionalising censorship and making it difficult to build anything innovative there,” he said. “Latin American countries have secret courts that can order companies to quietly take things down. China has censored our apps from even working in the country. The only way that we can push back on this global trend is with the support of the US government, and that’s why it’s been so difficult over the past four years when even the US government has pushed for censorship.”

In a recent statement, the EU rejected Meta’s claims that it has engaged in any form of censorship under its digital regulation.

“We absolutely refute any claims of censorship on our side,” European Commission spokesperson Paula Pinho told reporters in Brussels.